Project 5: Fun with Diffusion

Part A: Fun with Diffusion.

Part 1: Sampling Loops

1.1. Implementing the Forward Process

A key part of diffusion is the forward process (i.e., adding noise to an image). For this part, we take an image of the Berkeley Campanile and add noise to it at increasing noise levels using:

\[x_t = \sqrt{\bar\alpha_t}\, x_0 + \sqrt{1 - \bar\alpha_t}\, \epsilon \quad \text{where}~ \epsilon \sim N(0, 1)\]

Here are the results at noise levels [0, 250, 500, 750]:

| Original | 250 | 500 | 750 |
|---|---|---|---|
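The forward process above can be sketched in a few lines of NumPy. This is a minimal illustration, not the project's actual code: the linear beta schedule, the helper names `make_alphas_cumprod` and `forward`, and the random stand-in image are all assumptions, since the pretrained model ships its own schedule.

```python
import numpy as np

def make_alphas_cumprod(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    # Assumed linear beta schedule, for illustration only.
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def forward(x0, t, alphas_cumprod, rng):
    """Return (x_t, eps) with x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    abar_t = alphas_cumprod[t]
    eps = rng.standard_normal(x0.shape)  # eps ~ N(0, 1), one value per pixel
    x_t = np.sqrt(abar_t) * x0 + np.sqrt(1.0 - abar_t) * eps
    return x_t, eps

rng = np.random.default_rng(0)
alphas_cumprod = make_alphas_cumprod()
x0 = rng.uniform(-1.0, 1.0, size=(3, 64, 64))  # stand-in for the Campanile image
noisy = {t: forward(x0, t, alphas_cumprod, rng)[0] for t in (250, 500, 750)}
```

Because \(\bar\alpha_t\) shrinks as t grows, the image term fades and the noise term dominates at higher noise levels.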

1.2 Classical Denoising.

For comparison with later methods, we present the results of classical denoising with a low-pass (Gaussian blur) filter here:

| 250 | 500 | 750 |
|---|---|---|
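For reference, a Gaussian low-pass filter of the kind used in this baseline can be sketched as a separable convolution. The kernel size and sigma below are assumed values, not the ones used for the results above, and `gaussian_blur` is a hypothetical helper name.

```python
import numpy as np

def gaussian_kernel_1d(kernel_size=5, sigma=2.0):
    # Sample a Gaussian at integer offsets around the centre and normalise it.
    offsets = np.arange(kernel_size) - (kernel_size - 1) / 2.0
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    return kernel / kernel.sum()

def gaussian_blur(image, kernel_size=5, sigma=2.0):
    """Blur a (C, H, W) image; kernel_size is assumed to be odd."""
    kernel = gaussian_kernel_1d(kernel_size, sigma)
    pad = kernel_size // 2
    padded = np.pad(image, ((0, 0), (pad, pad), (pad, pad)), mode="reflect")
    # Filter along the width, then along the height; "valid" trims the padding.
    rows = np.apply_along_axis(np.convolve, 2, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 1, rows, kernel, mode="valid")

rng = np.random.default_rng(0)
noisy_image = rng.standard_normal((3, 64, 64))  # stand-in for a noisy image
blurred = gaussian_blur(noisy_image)
```

The blur averages out high-frequency noise, but it removes image detail in the same frequency band, so it cannot recover detail at high noise levels.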

1.3 One-Step Denoising.

For this part, we use a pretrained UNet to denoise our image in a single step. The results are much better than the low-pass filter, despite some unwanted hallucination at higher noise levels:

| 250 | 500 | 750 |
|---|---|---|
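One-step denoising amounts to solving the forward equation from 1.1 for \(x_0\) once the UNet has estimated the noise. The sketch below assumes the UNet returns a noise estimate `eps_hat`; here the true noise stands in for that output, and `abar_t` is a placeholder value.

```python
import numpy as np

def estimate_x0(x_t, eps_hat, abar_t):
    """Invert x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps for x0."""
    return (x_t - np.sqrt(1.0 - abar_t) * eps_hat) / np.sqrt(abar_t)

rng = np.random.default_rng(0)
abar_t = 0.3  # placeholder value of the cumulative alpha at the chosen timestep
x0 = rng.uniform(-1.0, 1.0, size=(3, 64, 64))  # stand-in clean image
eps = rng.standard_normal(x0.shape)
x_t = np.sqrt(abar_t) * x0 + np.sqrt(1.0 - abar_t) * eps

# The true noise stands in for the UNet's prediction in this sketch.
x0_hat = estimate_x0(x_t, eps, abar_t)
```

With a perfect noise estimate the clean image is recovered exactly. Any error in the estimate is divided by \(\sqrt{\bar\alpha_t}\), which is small at high noise levels, so errors are amplified there.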

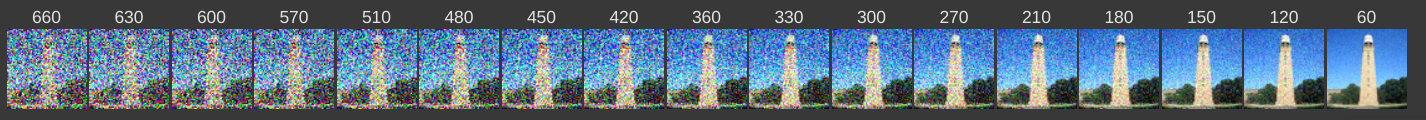

1.4 Iterative Denoising

To achieve better results, we apply the UNet denoiser multiple times. Here are the results at each timestep:

For comparison, we include previous results here:

| Gauss | OneStep | Iterative |
|---|---|---|
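A single iterative denoising step can be sketched with the standard DDPM posterior update between two (possibly strided) timesteps. Everything concrete here is an assumption: the linear schedule, the stride of 30, the posterior variance (the pretrained model may predict its own), and the `oracle_noise` function, which stands in for the UNet by using the known clean image.

```python
import numpy as np

def denoise_step(x_t, eps_hat, t, t_prev, alphas_cumprod, rng=None):
    """Move from timestep t to the less noisy t_prev given a noise estimate."""
    abar_t, abar_prev = alphas_cumprod[t], alphas_cumprod[t_prev]
    alpha_t = abar_t / abar_prev          # effective alpha over the stride
    beta_t = 1.0 - alpha_t
    x0_hat = (x_t - np.sqrt(1.0 - abar_t) * eps_hat) / np.sqrt(abar_t)
    mean = (np.sqrt(abar_prev) * beta_t / (1.0 - abar_t)) * x0_hat \
        + (np.sqrt(alpha_t) * (1.0 - abar_prev) / (1.0 - abar_t)) * x_t
    if rng is None:
        return mean                        # deterministic variant
    variance = beta_t * (1.0 - abar_prev) / (1.0 - abar_t)
    return mean + np.sqrt(variance) * rng.standard_normal(x_t.shape)

rng = np.random.default_rng(0)
alphas_cumprod = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 1000))  # assumed schedule
timesteps = list(range(990, -1, -30))                             # assumed stride
x0 = rng.uniform(-1.0, 1.0, size=(3, 32, 32))                     # stand-in image

def oracle_noise(x_t, t):
    # Hypothetical stand-in for the UNet: recover the noise from the known x0.
    abar_t = alphas_cumprod[t]
    return (x_t - np.sqrt(abar_t) * x0) / np.sqrt(1.0 - abar_t)

abar_start = alphas_cumprod[timesteps[0]]
x = np.sqrt(abar_start) * x0 + np.sqrt(1.0 - abar_start) * rng.standard_normal(x0.shape)
for t, t_prev in zip(timesteps[:-1], timesteps[1:]):
    x = denoise_step(x, oracle_noise(x, t), t, t_prev, alphas_cumprod, rng)
```

Each step blends the current clean-image estimate with the current noisy image, so the sample moves towards the clean image gradually instead of jumping there in one step.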

1.5 Diffusion Model Sample.

To sample from the diffusion model, we iteratively denoise pure noise. Here are 5 samples:

| 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|

1.6 CFG.

To achieve better samples, we use classifier-free guidance (CFG), which mixes the conditional and unconditional noise estimates. Here are 5 samples with a CFG scale of 7:

| 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
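The mixing step in classifier-free guidance is a one-line extrapolation from the unconditional noise estimate towards the conditional one. In this sketch the two estimates are random placeholders for the UNet outputs, and `cfg_noise` is a hypothetical helper name.

```python
import numpy as np

def cfg_noise(eps_cond, eps_uncond, scale=7.0):
    """Combine noise estimates: eps = eps_u + scale * (eps_c - eps_u)."""
    return eps_uncond + scale * (eps_cond - eps_uncond)

rng = np.random.default_rng(0)
eps_cond = rng.standard_normal((3, 64, 64))    # placeholder conditional estimate
eps_uncond = rng.standard_normal((3, 64, 64))  # placeholder unconditional estimate
eps = cfg_noise(eps_cond, eps_uncond, scale=7.0)
```

A scale of 0 gives the unconditional estimate and a scale of 1 gives the conditional one. Scales above 1, such as the 7 used here, push the estimate past the conditional one.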

1.7. Image2Image Translation.

| i_start=1 | i_start=3 | i_start=5 | i_start=7 | i_start=10 | i_start=20 |
|---|---|---|---|---|---|

1.7.1 Editing Hand-Drawn and Web Images.

| i_start=1 | i_start=3 | i_start=5 | i_start=7 | i_start=10 | i_start=20 |
|---|---|---|---|---|---|

1.7.2 Inpainting.

| Original | Mask | Inpainting |
|---|---|---|

1.7.3 Text-Conditioning.

| i_start=1 | i_start=3 | i_start=5 | i_start=7 | i_start=10 | i_start=20 |
|---|---|---|---|---|---|

1.8. Visual Anagrams

| Old man | Campfire |
|---|---|

| Old man | Barista |
|---|---|

| Old man | Dog |
|---|---|

1.9 Hybrid Images.

| Hybrid of Skull and Waterfall |
|---|

| Hybrid of Skull and Dog |
|---|

| Hybrid of Skull and Campfire |
|---|

Part B: Fun with Diffusion.

Part 1: Training Single-Step Denoising UNet.

1.2. Forward

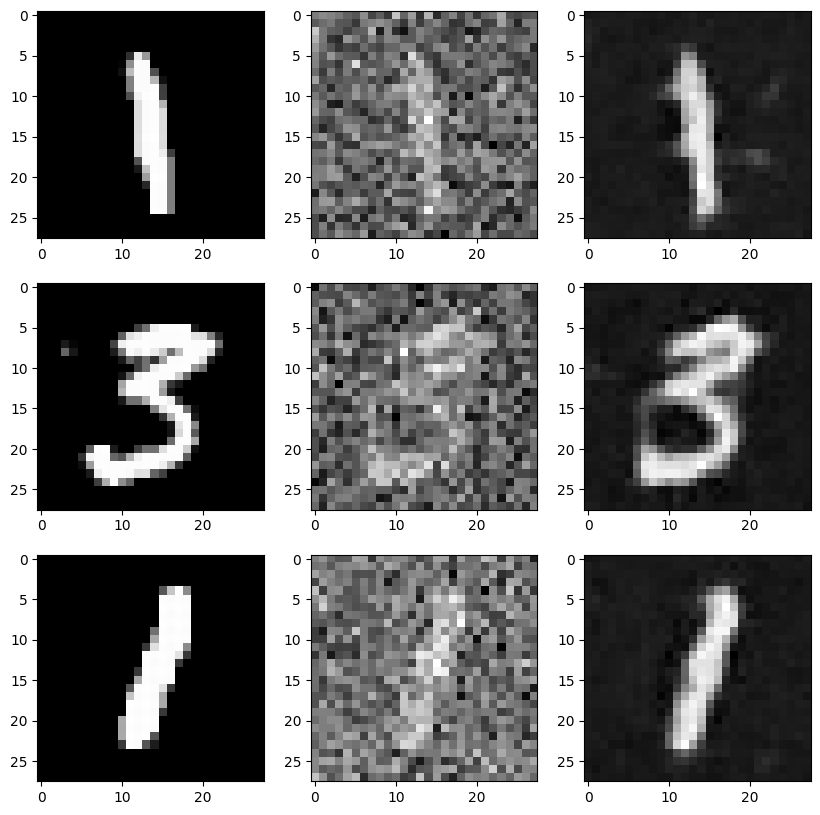

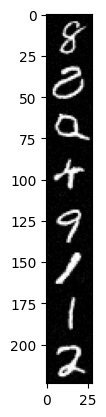

First, we implement a forward (noise-adding) process. Here’s the result of adding increasing amounts of noise to the original image:

1.2.1 Training.

Now, we train our UNet to denoise an image with noise level sigma=0.5 applied to it.
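Building the training pairs for this step can be sketched as follows. The additive form `z = x + sigma * eps`, the mean-squared-error objective, and the 28x28 single-channel batch are assumptions made for illustration, and no actual UNet is involved.

```python
import numpy as np

def add_noise(x, sigma, rng):
    # Assumed noising process: z = x + sigma * eps, with eps ~ N(0, 1).
    return x + sigma * rng.standard_normal(x.shape)

def mse_loss(prediction, target):
    # Assumed training objective: mean squared error against the clean image.
    return float(np.mean((prediction - target) ** 2))

rng = np.random.default_rng(0)
clean = rng.uniform(0.0, 1.0, size=(8, 1, 28, 28))  # stand-in batch of clean images
noisy = add_noise(clean, sigma=0.5, rng=rng)

# Loss of an identity "denoiser" that returns its input unchanged.
# It is roughly sigma**2, which a trained denoiser should beat.
baseline_loss = mse_loss(noisy, clean)
```

The same `add_noise` helper with other values of sigma produces the out-of-distribution inputs tested in 1.2.2.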

Here’s our loss curve:

and here are our denoising results at epoch 5:

and at epoch 1:

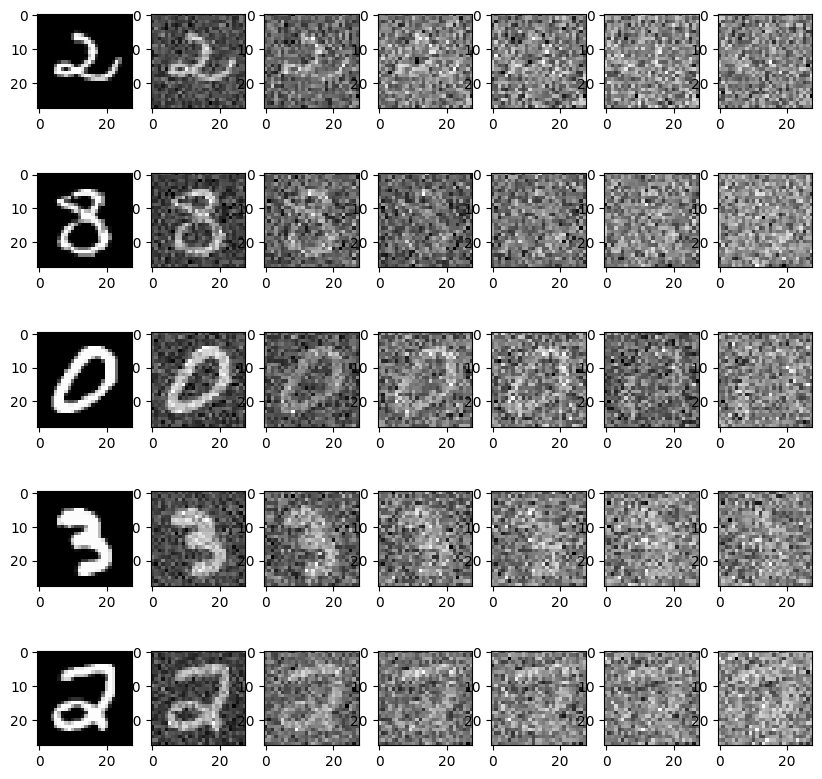

1.2.2 Out-of-Distribution Testing.

We’ll now apply our UNet to noise levels it wasn’t trained on. Here are the results for noise levels [0.0, 0.2, 0.4, 0.5, 0.6, 0.8, 1.0]:

Notice that the denoising quality gets progressively worse as the noise level increases.

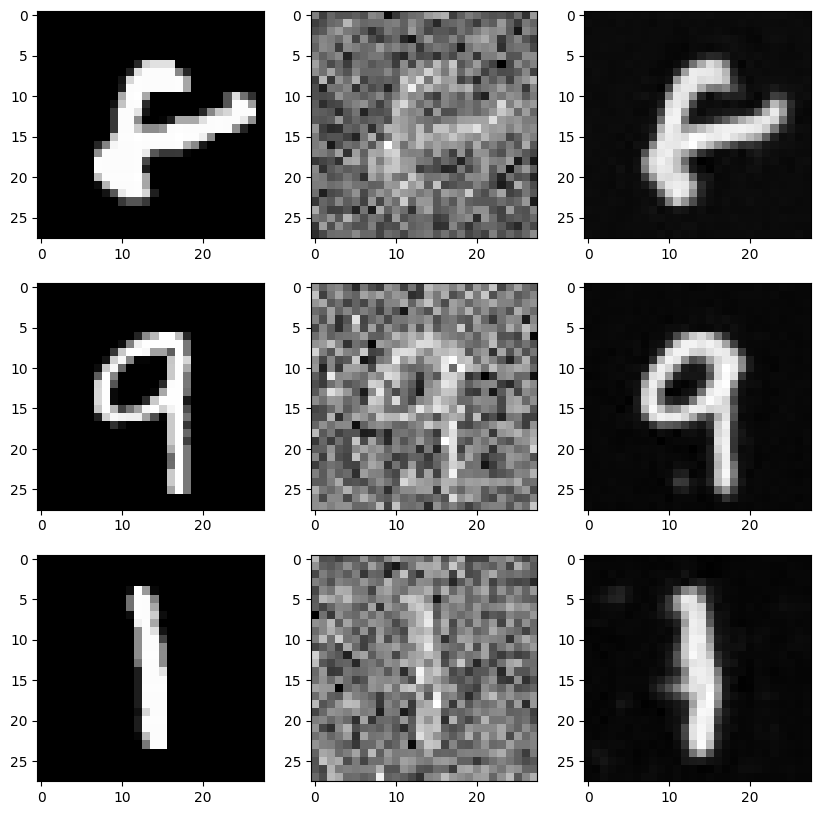

Part 2: Training a Diffusion Model.

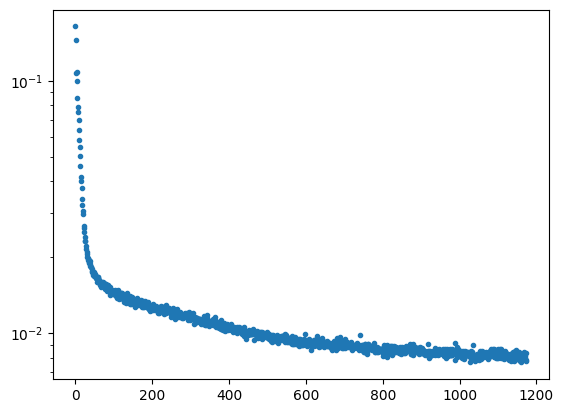

2.1 Time-Conditioning.

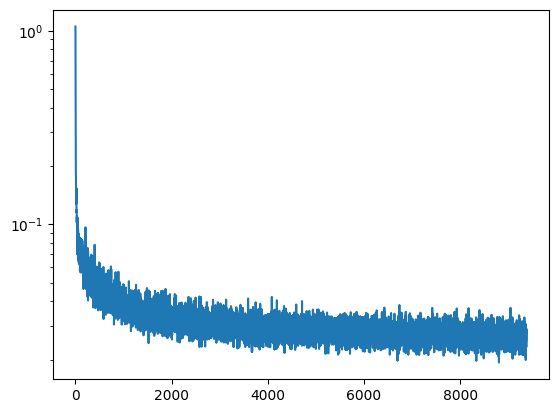

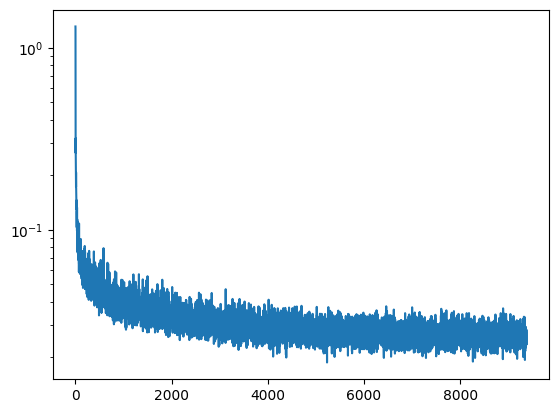

We now train our UNet with time-conditioning. Here’s the loss curve:

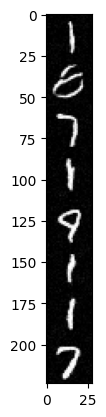
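One training batch for a time-conditioned noise predictor can be sketched as below. The number of timesteps (300), the linear beta schedule, the normalisation of t to [0, 1), and the noise-prediction loss are all assumptions, and the UNet itself is left out.

```python
import numpy as np

def make_schedule(num_timesteps=300, beta_start=1e-4, beta_end=0.02):
    # Assumed linear beta schedule; returns the cumulative product of alphas.
    betas = np.linspace(beta_start, beta_end, num_timesteps)
    return np.cumprod(1.0 - betas)

def make_training_batch(x0_batch, alphas_cumprod, rng):
    """Sample one timestep per image and build (x_t, normalised t, target noise)."""
    batch_size = x0_batch.shape[0]
    num_timesteps = len(alphas_cumprod)
    t = rng.integers(0, num_timesteps, size=batch_size)
    eps = rng.standard_normal(x0_batch.shape)
    abar = alphas_cumprod[t].reshape(batch_size, 1, 1, 1)  # broadcast over C, H, W
    x_t = np.sqrt(abar) * x0_batch + np.sqrt(1.0 - abar) * eps
    return x_t, t / num_timesteps, eps

def noise_prediction_loss(eps_hat, eps):
    # Mean squared error between predicted and true noise.
    return float(np.mean((eps_hat - eps) ** 2))

rng = np.random.default_rng(0)
alphas_cumprod = make_schedule()
x0_batch = rng.uniform(0.0, 1.0, size=(8, 1, 28, 28))  # stand-in image batch
x_t, t_norm, eps = make_training_batch(x0_batch, alphas_cumprod, rng)
```

In a real training loop, a model call such as a hypothetical `unet(x_t, t_norm)` would produce `eps_hat`, and `noise_prediction_loss(eps_hat, eps)` would be minimised.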

Here are our results sampling from the time-conditioned UNet at certain epochs:

| Epoch 5 | Epoch 20 |
|---|---|

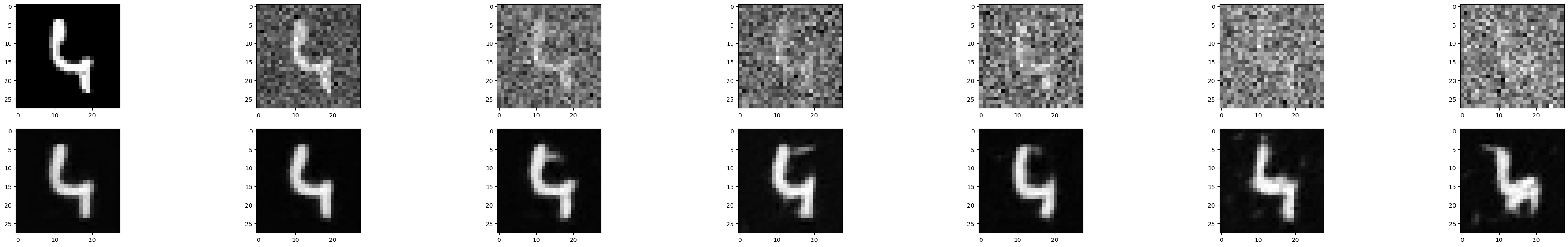

2.4 Class-Conditioning

Now we train our UNet with class-conditioning (on top of time-conditioning). Here’s the loss curve:

Here are our results sampling from the class-conditioned UNet at certain epochs:

| Epoch 5 | Epoch 20 |
|---|---|
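The class-conditioning signal can be sketched as a one-hot vector that is occasionally zeroed out, so the model also learns an unconditional prediction that CFG-style sampling can use. The ten classes, the 10% drop probability, and the helper name `make_class_condition` are assumptions, not details taken from the results above.

```python
import numpy as np

def make_class_condition(labels, num_classes=10, p_uncond=0.1, rng=None):
    """One-hot encode labels and zero each row with probability p_uncond."""
    rng = np.random.default_rng() if rng is None else rng
    one_hot = np.eye(num_classes)[labels]         # shape (batch, num_classes)
    keep = rng.random(len(labels)) >= p_uncond    # False means drop the condition
    return one_hot * keep[:, None]

rng = np.random.default_rng(0)
labels = np.array([3, 1, 4, 1, 5, 9, 2, 6])  # stand-in class labels
cond = make_class_condition(labels, rng=rng)
```

A row of all zeros acts as the "no class" input during training, and the same zero vector can be passed at sampling time to get the unconditional estimate.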

Bells & Whistles

Here are our GIFs for the time-conditioned UNet:

| Epoch 1 | Epoch 5 | Epoch 20 |
|---|---|---|

and for the class-conditioned UNet:

| Epoch 1 | Epoch 5 | Epoch 20 |
|---|---|---|